by Prcrstntr

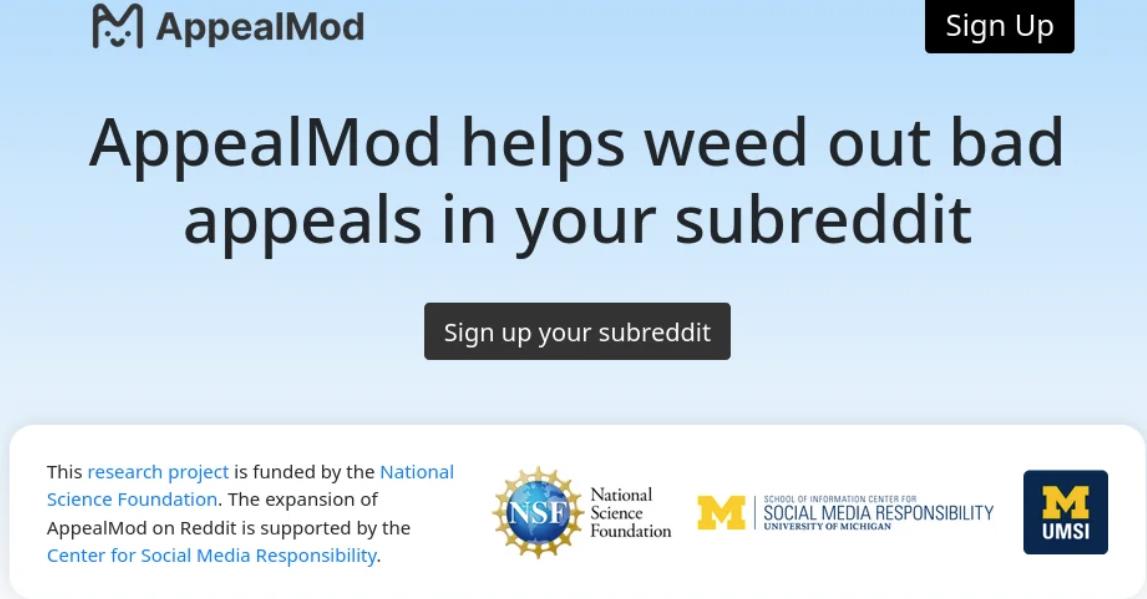

The United States Government helps fund censorship on Reddit through the University of Michigan Center for Social Media Responsibility (CSMR).

NSF grant:

https://www.nsf.gov/awardsearch/showAward?AWD_ID=1928434&HistoricalAwards=false

Funds Obligated to Date

FY 2019 = $347,685.00

FY 2023 = $69,354.00

Research paper:

https://arxiv.org/abs/2301.07163

NSF award abstract: Around the world, users of social media platforms generate millions of comments, videos, and photos per day. Within this content is dangerous material such as child pornography, sex trafficking, and terrorist propaganda. Though platforms leverage algorithmic systems to facilitate detection and removal of problematic content, decisions about whether to remove content, whether it’s as benign as an off-topic comment or as dangerous as self-harm or abuse videos, are often made by humans. Companies are hiring moderators by the thousands and tens of thousands work as volunteer moderators. This work involves economic, emotional, and often physical safety risks. With social media content moderation as the focus of work and the content moderators as the workers, this project facilitates the human-technology partnership by designing new technologies to augment moderator performance. The project will improve moderators’ quality of life, augment their capabilities, and help society understand how moderation decisions are made and how to support the workers who help keep the internet open and enjoyable. These advances will enable moderation efforts to keep pace with user-generated content and ensure that problematic content does not overwhelm internet users. The project includes outreach and engagement activities with academic, industry, policy-makers, and the public that ensure the project’s findings and tools support broad stakeholders impacted by user-generated content and its moderation.

Specifically, the project involves five main research objectives that will be met through qualitative, historical, experimental, and computational research approaches. First, the project will improve understanding of human-in-the-loop decision making practices and mental models of moderation by conducting interviews and observations with moderators across different content domains. Second, it will assess the socioeconomic impact of technology-augmented moderation through industry personnel interviews. Third, the project will test interventions to decrease the emotional toll on human moderators and optimize their performance through a series of experiments utilizing theories of stress alleviation. Fourth, the project will design, develop, and test a suite of cognitive assistance tools for live streaming moderators. These tools will focus on removing easy decisions and helping moderators dynamically manage their emotional and cognitive capabilities. Finally, the project will employ a historical perspective to analyze companies’ content moderation policies to inform legal and platform policies.

This award reflects NSF’s statutory mission and has been deemed worthy of support through evaluation using the Foundation’s intellectual merit and broader impacts review criteria.

This is a single example, and one that started before ChatGPT was around. I’m sure there are others.

The advent of LLMs opens up far more possibilities. Tasks that used to need their own dedicated techniques, like “sentiment analysis”, are things the AI can now do natively.

Eventually, moderation on major platforms will simply feed every comment into an AI and ask “Does this break any rules? If so, which one?”, and any post that does gets hidden. Keep in mind that things like election denial and certain COVID opinions were against the rules on many platforms. If there were major social unrest, that would be added to the rules as well, to quell any rebellion.
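To make that loop concrete, here is a rough sketch of what I imagine it looking like. Everything in it is made up for illustration: the rule IDs, the prompt wording, and the `keyword_stub` function, which is just a dumb stand-in for wherever a real LLM call would go. It is not any platform's actual system.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical rules list; a real platform's rules would be longer and vaguer.
RULES = {
    "R1": "No spam or advertising",
    "R2": "No personal attacks",
}


@dataclass
class Verdict:
    hidden: bool
    rule: Optional[str]  # ID of the rule that was broken, if any


def moderate(comment: str, ask_model: Callable[[str], str]) -> Verdict:
    """Ask the model whether the comment breaks a rule; hide it if so."""
    rules_text = "\n".join(f"{rid}: {text}" for rid, text in RULES.items())
    prompt = (
        f"Rules:\n{rules_text}\n\n"
        f"Comment:\n{comment}\n\n"
        "Does this break any rules? If so, which one? "
        "Answer with a rule ID or NONE."
    )
    answer = ask_model(prompt).strip()
    if answer in RULES:
        return Verdict(hidden=True, rule=answer)
    # Anything that is not a known rule ID is treated as "no violation".
    return Verdict(hidden=False, rule=None)


def keyword_stub(prompt: str) -> str:
    """Stand-in for an LLM: a keyword check on the comment part of the prompt."""
    comment = prompt.split("Comment:\n", 1)[1].split("\n\nDoes this", 1)[0].lower()
    if "buy now" in comment:
        return "R1"
    if "idiot" in comment:
        return "R2"
    return "NONE"


if __name__ == "__main__":
    for text in ["Buy now, cheap watches!", "I disagree with this policy."]:
        print(text, "->", moderate(text, keyword_stub))
```

The point of the sketch is how little is there: swap the stub for a real model and add a line to `RULES`, and a whole new category of comment starts disappearing.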

For an example of something that could easily be “moderated” by future AI tools, take a look at the comments on any of the threads about the UnitedHealthcare CEO who was assassinated today. In the near future those could all be removed automatically because a robot deemed them “antisocial behavior” of some form or another. Already most comments have a good chance of getting removed, but only because they trigger a dumb filter. The next filters will be smart.

The government will use similar tech to watch the internet, and as the pile of internet laws continues to grow, it will silently add new rules to an AI rules list that all forums will eventually have to subscribe to. The tech will only improve, and things will only get worse.